Automated Simulation Creation from Military Operations Documents

Abstract

The creation of virtual reality simulations for training or analysis is an arduous process requiring specialized knowledge. Graphical models and even animated, articulated figures can now be obtained from websites or hired artists. Even after these assets are obtained, putting scenes together and authoring character behaviors can be a lengthy process. Furthermore, ensuring that character behaviors will be successfully performed in a virtual environment is often a trial-and-error process. Automating the creation of these behaviors and facilitating their modification by Subject Matter Experts (SMEs) – as opposed to technicians – will shorten the time required and reduce costs.

Sample Input Text (pdf)

Paper (pdf)

Video

Images

The following examples are used to illustrate what the presented method handles well and where additional refinements may be needed. In some cases, temporary models are used as placeholders.

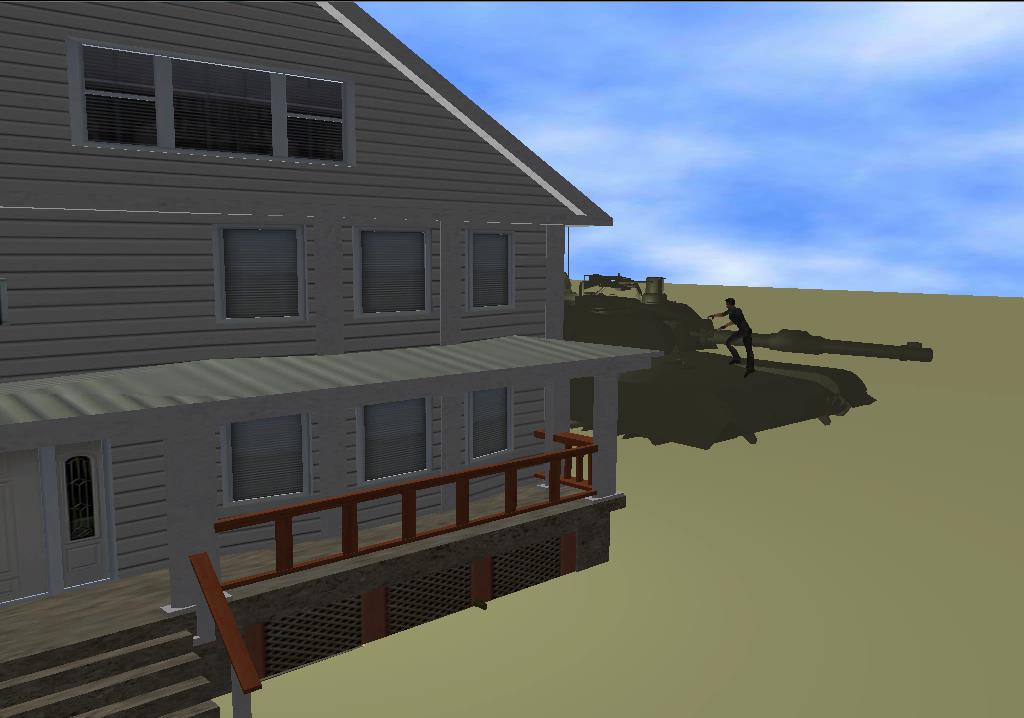

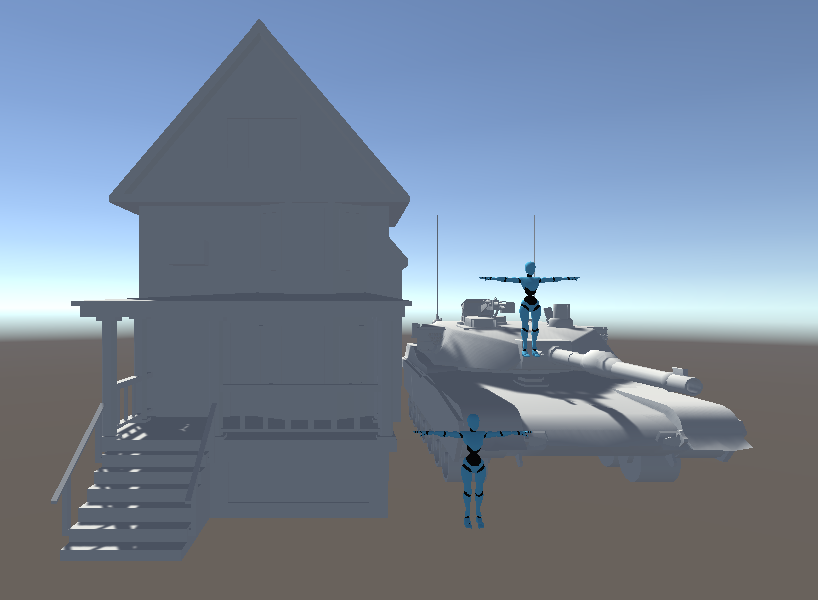

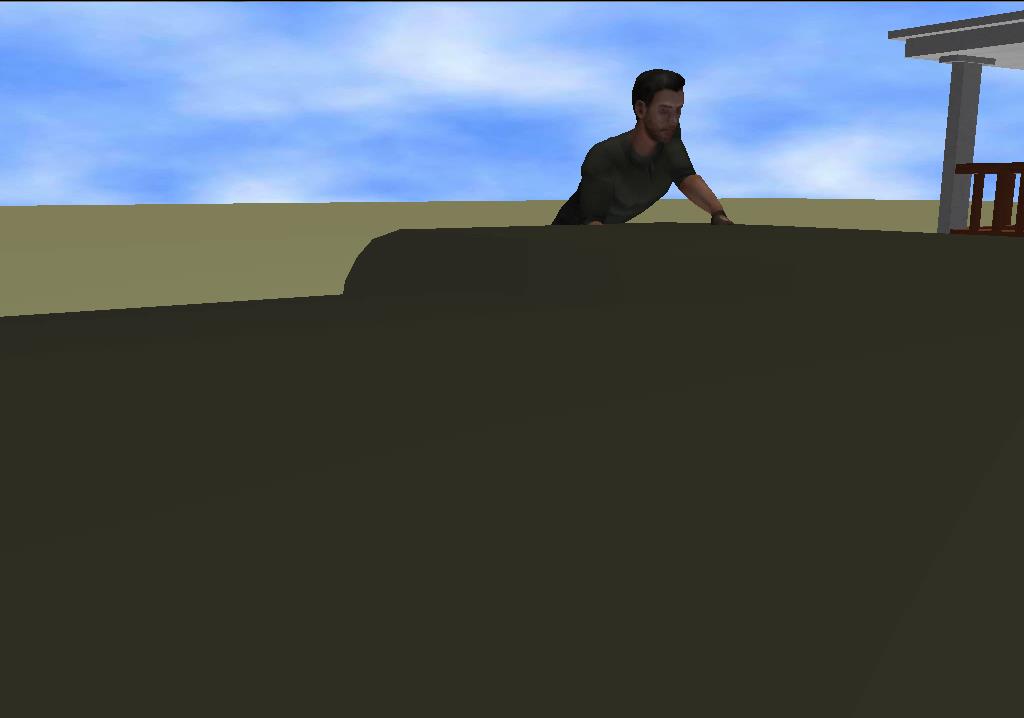

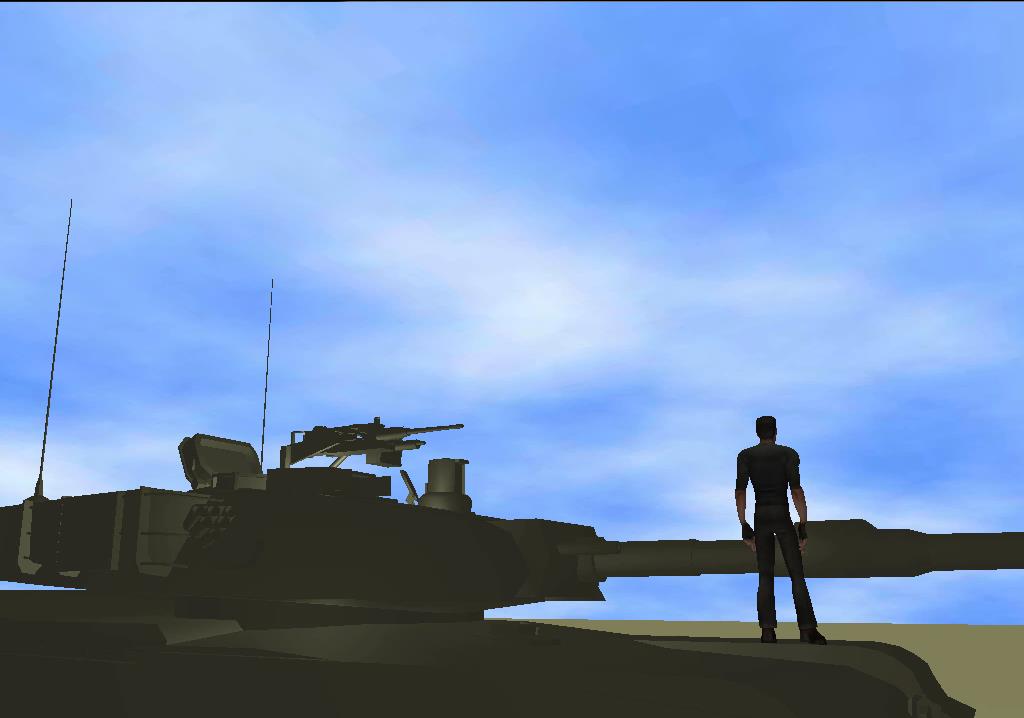

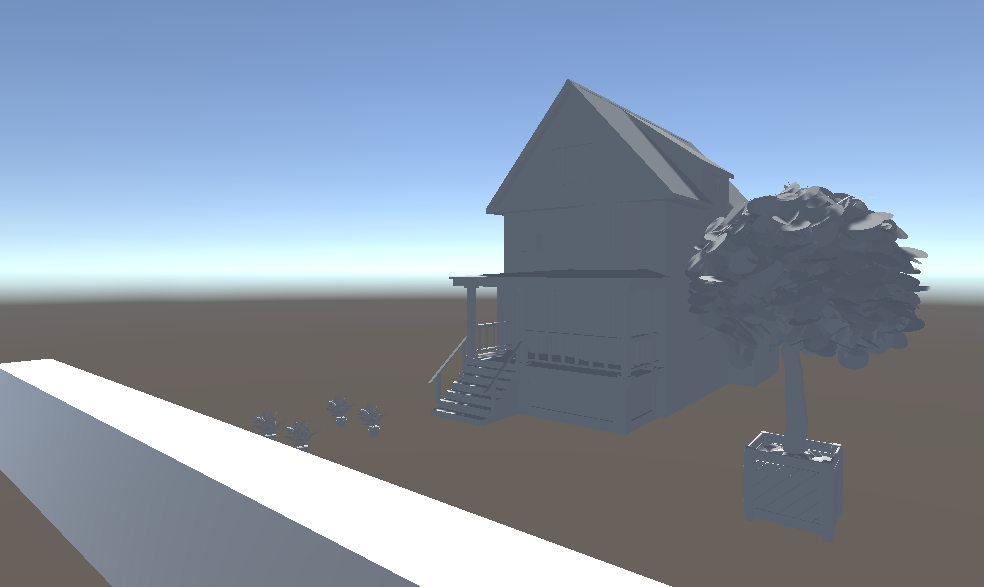

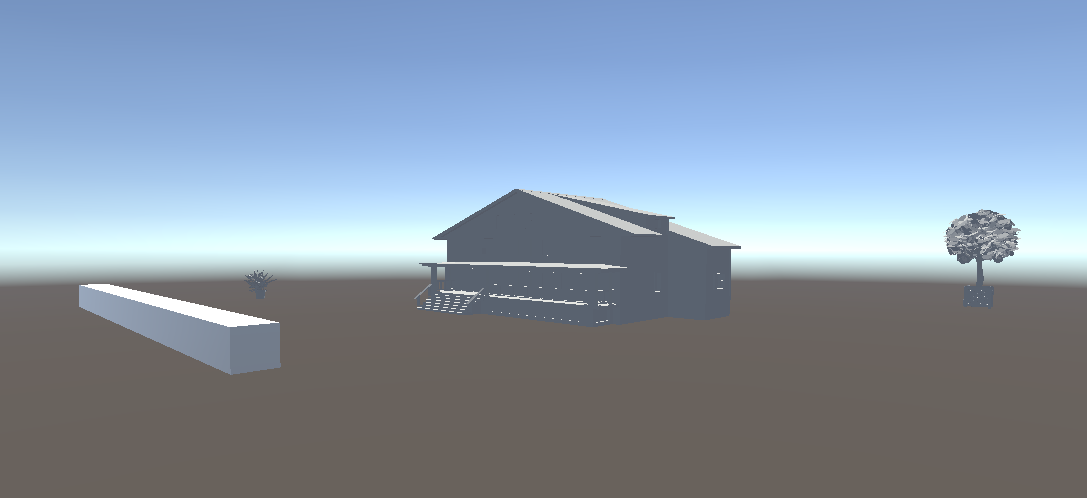

"Armored vehicles can be positioned next to a building allowing soldiers to use the vehicle as a platform to enter a room or gain access to a roof."

Scene created by hand in Unity. The humanoids represent mark points.

Scene created automatically from Natural Language input.


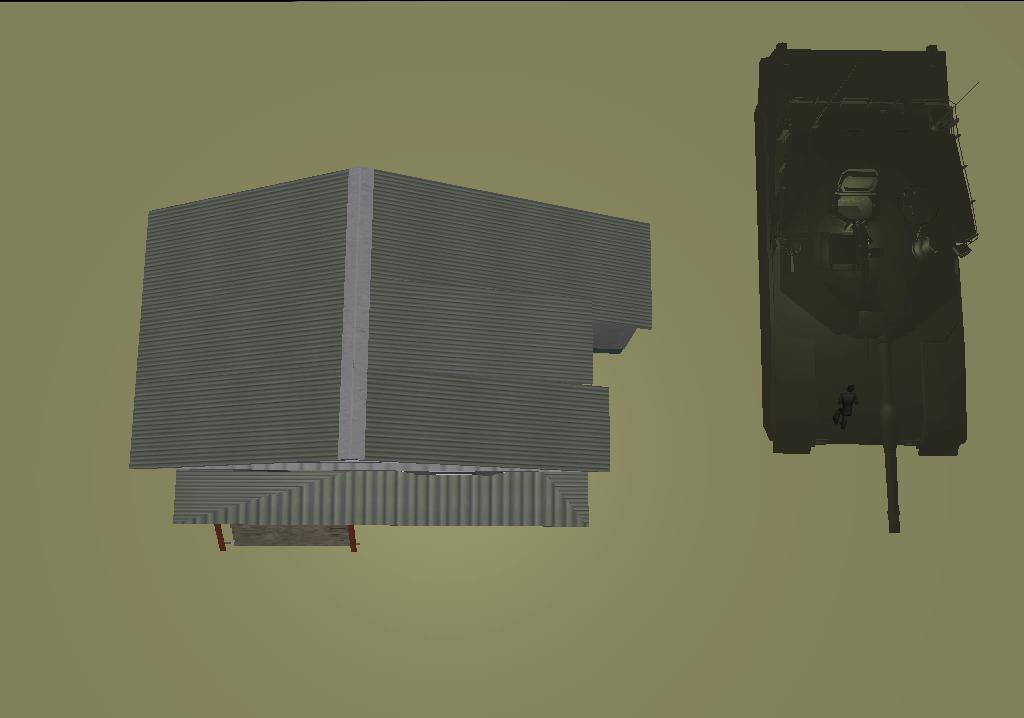

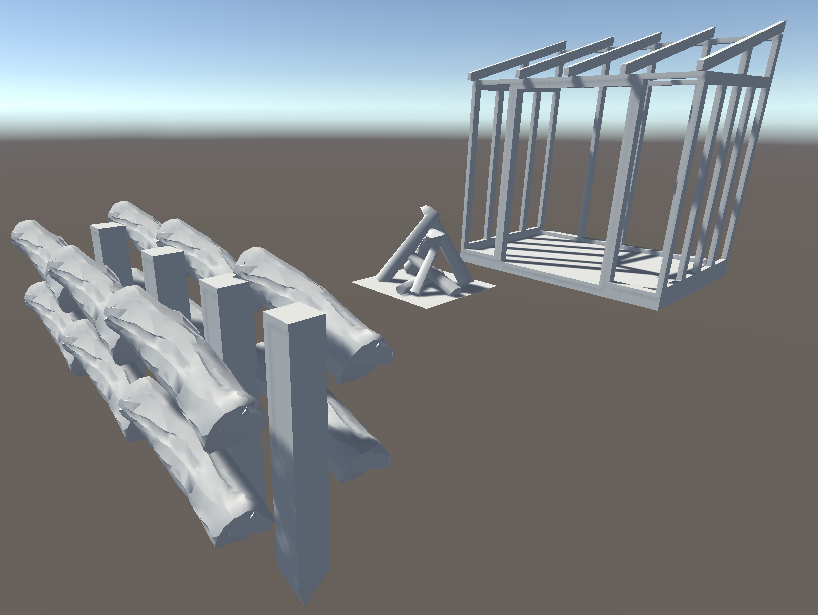

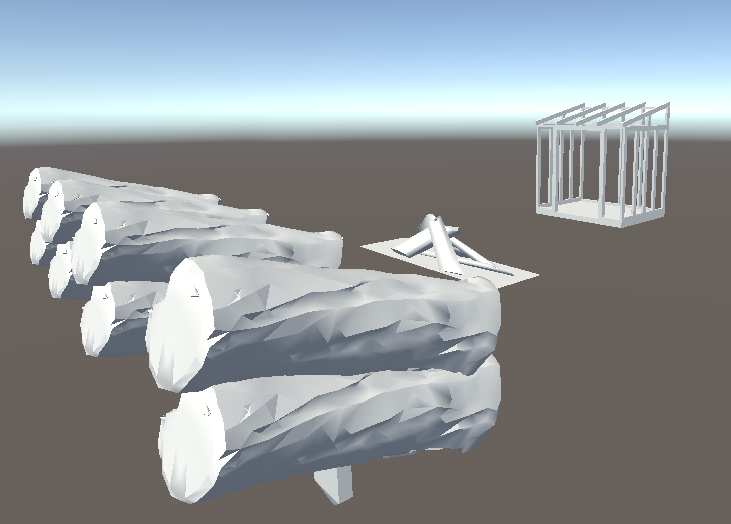

“In cold weather, add to your lean-to's comfort by building a fire reflector wall (Figure 5-9). Drive four 1.5-meter-long (5-foot-long) stakes into the ground to support the wall. Stack green logs on top of one another between the support stakes. Form two rows of stacked logs to create an inner space within the wall that you can fill with dirt. This action not only strengthens the wall but makes it more heat reflective. Bind the top of the support stakes so that the green logs and dirt will stay in place.”

Scene created by hand in Unity. The lean-to is represented by a simple building frame model.

Scene created automatically from Natural Language input. The lean-to is represented by a simple building frame model.

“This type area is normally contiguous to close-orderly block areas in Europe. The pattern consists of row houses or single-family dwellings with yards, gardens, trees, and fences. Street patterns are normally rectangular or curving.”

Scene created by hand in Unity.

Scene created automatically from Natural Language input.


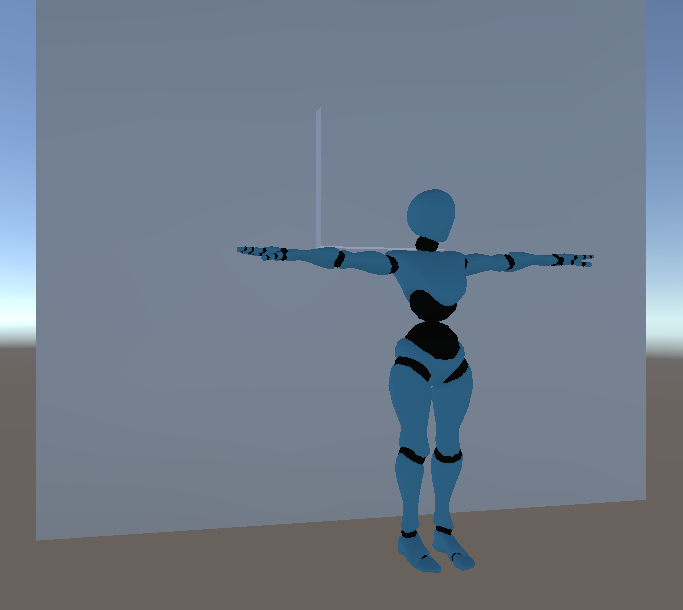

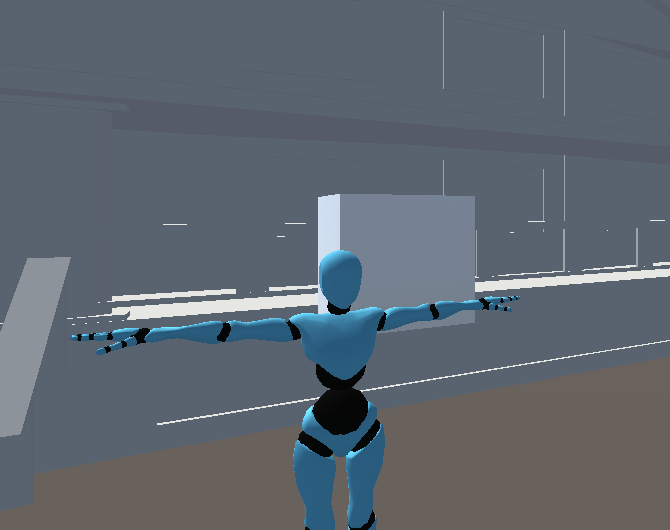

“When using the correct technique for passing a first-floor window, the soldier stays below the window level and near the side of the building. He makes sure he does not silhouette himself in the window. An enemy gunner inside the building would have to expose himself to covering fires if he tried to engage the soldier.”

Scene created by hand in Unity. The cube represents the window and the humanoid represents a mark point.

Scene created automatically from Natural Language input. The cube represents a window on the front of a house and the humanoid a mark point.


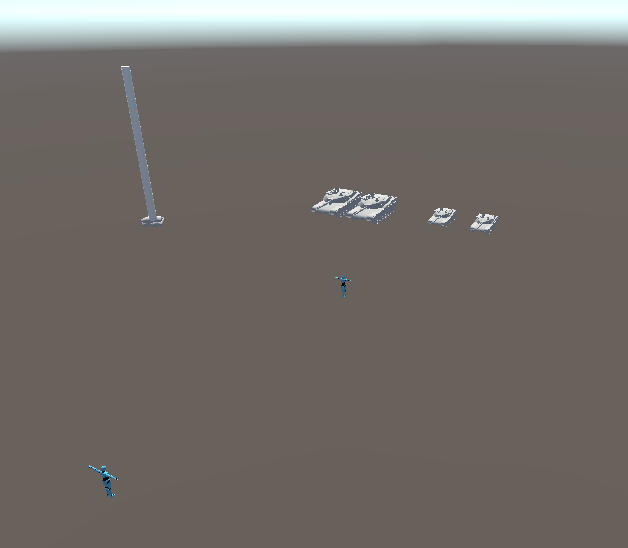

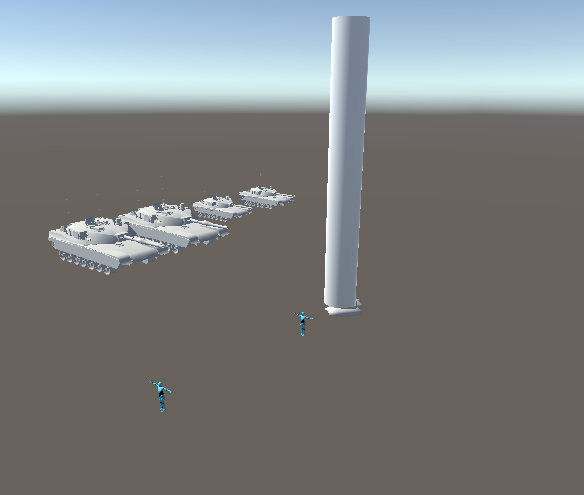

“The battalion plan of action was as follows: one platoon of Company "F," with a light machine gun section, would stage the initial diversionary attack. It would be supported by two tanks and two tank destroyers, who were instructed to shoot at all or any suspected targets. Observation posts had been manned on a slag pile to support the advance with 81-mm mortar fire...The platoon action was to be the first step...to reduce the town of Aachen.”

Scene created by hand in Unity. Platoon and targets are represented by humanoids. The observation post is represented by a tall cylinder. The slag pile is represented by a pile of sandbags.

Scene created automatically from Natural Language input. Platoon and targets are represented by humanoids. The observation post is represented by a tall cylinder. The slag pile is represented by a pile of sandbags.

"There is a plate on the table. In front of the plate is a candle, and in front of the candle is a bowl. There is a spoon to the right of the bowl. A chair is in front of the bowl. There is an apple on the plate. To the left of the plate is a fork, and to the right of the plate is a knife. Behind the table is a throne. John picks up the apple and eats the apple."

Scene created automatically from Natural Language.
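
The table-setting input above consists mostly of pairwise spatial relations ("in front of", "to the left of", and so on). As a rough illustration only, and not the method described in the paper, the sketch below shows one way such sentences could be turned into relative grid positions. The relation offsets, the regular expressions, and the function names `extract_relations` and `place` are all hypothetical choices made for this example; "on" relations and character actions are deliberately ignored.

```python
import re

# Hypothetical unit offsets on a 2D grid (x = right, z = toward the viewer).
# These values are assumptions for illustration, not taken from the paper.
OFFSETS = {
    "in front of": (0, 1),
    "behind": (0, -1),
    "to the left of": (-1, 0),
    "to the right of": (1, 0),
}
REL = "|".join(OFFSETS)  # regex alternation over the relation phrases

# "In front of the plate is a candle" -> relation, anchor, object
PATTERN_A = re.compile(rf"\b({REL}) the (\w+) is an? (\w+)", re.IGNORECASE)
# "There is a spoon to the right of the bowl" / "A chair is in front of the bowl"
PATTERN_B = re.compile(rf"\ban? (\w+) (?:is )?({REL}) the (\w+)", re.IGNORECASE)


def extract_relations(text):
    """Return (object, relation, anchor) triples found in simple clauses."""
    relations = []
    for clause in re.split(r"[.,]", text):
        clause = clause.strip()
        m = PATTERN_A.search(clause)
        if m:
            rel, anchor, obj = m.groups()
        else:
            m = PATTERN_B.search(clause)
            if not m:
                continue  # clause has no supported spatial relation
            obj, rel, anchor = m.groups()
        relations.append((obj.lower(), rel.lower(), anchor.lower()))
    return relations


def place(relations, known):
    """Propagate positions from already-placed anchors to related objects."""
    positions = dict(known)
    pending = list(relations)
    progress = True
    while pending and progress:
        progress = False
        for item in list(pending):
            obj, rel, anchor = item
            if anchor in positions:
                dx, dz = OFFSETS[rel]
                ax, az = positions[anchor]
                positions.setdefault(obj, (ax + dx, az + dz))
                pending.remove(item)
                progress = True
    return positions  # objects whose anchor was never placed are omitted


SAMPLE = (
    "There is a plate on the table. In front of the plate is a candle, "
    "and in front of the candle is a bowl. There is a spoon to the right "
    "of the bowl. A chair is in front of the bowl. There is an apple on "
    "the plate. To the left of the plate is a fork, and to the right of "
    "the plate is a knife. Behind the table is a throne. John picks up "
    "the apple and eats the apple."
)

if __name__ == "__main__":
    layout = place(extract_relations(SAMPLE), known={"plate": (0, 0)})
    for name, pos in layout.items():
        print(name, pos)
```

Seeding `known` with only the plate leaves the throne unplaced, because its anchor (the table) has no position yet. A fuller system would also need to resolve support relations such as "on", object sizes, and collisions.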
