Abstract

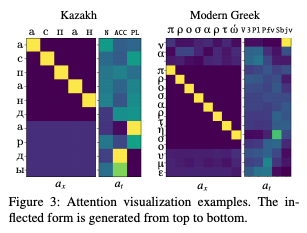

Recent years have seen exceptional strides in the task of automatic morphological inflection generation. However, for a long tail of languages the necessary resources are hard to come by, and state-of-the-art neural methods that work well under higher resource settings perform poorly in the face of a paucity of data. In response, we propose a battery of improvements that greatly improve performance under such low-resource conditions. First, we present a novel two-step attention architecture for the inflection decoder. In addition, we investigate the effects of cross-lingual transfer from single and multiple languages, as well as monolingual data hallucination. The macro-averaged accuracy of our models outperforms the state-of-the-art by 15 percentage points. Also, we identify the crucial factors for success with cross-lingual transfer for morphological inflection: typological similarity and a common representation across languages.