Paper: A Spatial EA Framework for Parallelizing Machine Learning Methods

Abstract: The scalability of machine learning (ML) algorithms has become increasingly important due to the ever-increasing size of datasets and increasing complexity of the models induced. Standard approaches for dealing with this issue generally involve developing parallel and distributed versions of the ML algorithms and/or reducing the dataset sizes via sampling techniques. In this paper we describe an alternative approach that combines features of spatially-structured evolutionary algorithms (SSEAs) with the well-known machine learning techniques of ensemble learning and boosting. The result is a powerful and robust framework for parallelizing ML methods in a way that does not require changes to the ML methods. We first describe the framework and illustrate its behavior on a simple synthetic problem, and then evaluate its scalability and robustness using several different ML methods on a set of benchmark problems from the UC Irvine ML database.

Source Code for All Experiments

Many of the WEKA algorithms have bugs when run from multi-threaded code (IBk, the nearest-neighbor classifier, for example), so we implemented two different packages. The first is a complete single-threaded WEKA classifier that works with the Explorer and the Experimenter in the generic way. The one caveat is that the seed has to be changed manually between experiment runs. The parallel algorithm uses the current time in milliseconds as a large seed when drawing the validation set from the training set. A large seed is important for getting a different sample of the data to train and validate on across multiple runs of the algorithm.
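The seeding idea described above can be sketched in plain Java. This is a minimal illustration, not the code from either package: the class name `ValidationSplit`, the method `split`, and the 30% validation fraction are hypothetical, and the sketch shuffles instance indices rather than using WEKA's data structures.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Hypothetical helper: carves a validation set out of the training set.
public class ValidationSplit {

    /** Returns a two-element list: [trainIndices, validationIndices]. */
    public static List<List<Integer>> split(int n, double validationFraction, long seed) {
        List<Integer> indices = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            indices.add(i);
        }
        // The seed fully determines the shuffle, so a new seed gives a new split.
        Collections.shuffle(indices, new Random(seed));

        int nValidation = (int) Math.round(n * validationFraction);
        List<Integer> validation = new ArrayList<>(indices.subList(0, nValidation));
        List<Integer> train = new ArrayList<>(indices.subList(nValidation, n));

        List<List<Integer>> result = new ArrayList<>();
        result.add(train);
        result.add(validation);
        return result;
    }

    public static void main(String[] args) {
        // Assumed reading of "time in milliseconds": the wall-clock time at launch.
        long seed = System.currentTimeMillis();
        List<List<Integer>> parts = split(10, 0.3, seed);
        System.out.println("seed=" + seed);
        System.out.println("train=" + parts.get(0));
        System.out.println("validation=" + parts.get(1));
    }
}
```

With a fixed seed every run reproduces the same split, which is why the single-threaded package needs its seed changed by hand between runs. A time-based seed trades that reproducibility for a fresh split on each launch, so it is worth logging the seed if a run has to be repeated.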

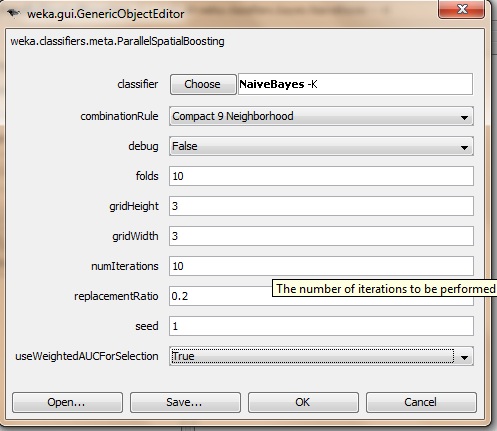

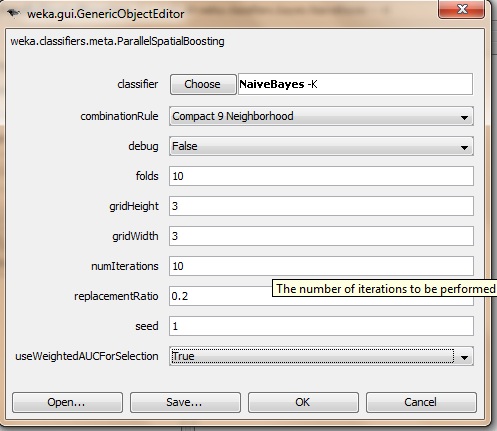

Parameters when running through WEKA (note the useWeightedAUCForSelection option, which uses AUC measured on the validation set for selection instead of just the percentage correct)
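To make the difference between AUC and percentage correct concrete, here is a small self-contained sketch. It assumes that "weighted AUC" means the class-frequency-weighted average of one-vs-rest AUC values, which is an assumption about the option rather than something stated here. The class `WeightedAuc` and its methods are hypothetical and do not call WEKA.

```java
// Hypothetical sketch: weighted one-vs-rest AUC on a validation set.
public class WeightedAuc {

    /** One-vs-rest AUC for class c, computed by counting correctly ranked pairs. */
    static double aucForClass(double[][] probs, int[] labels, int c) {
        double wins = 0.0;
        long pairs = 0;
        for (int i = 0; i < labels.length; i++) {
            if (labels[i] != c) continue;          // i ranges over positives
            for (int j = 0; j < labels.length; j++) {
                if (labels[j] == c) continue;      // j ranges over negatives
                pairs++;
                if (probs[i][c] > probs[j][c]) {
                    wins += 1.0;                   // positive ranked above negative
                } else if (probs[i][c] == probs[j][c]) {
                    wins += 0.5;                   // ties count as half
                }
            }
        }
        return pairs == 0 ? Double.NaN : wins / pairs;
    }

    /** Averages per-class AUC, weighting each class by its instance count. */
    public static double weightedAuc(double[][] probs, int[] labels, int numClasses) {
        double total = 0.0;
        int counted = 0;
        for (int c = 0; c < numClasses; c++) {
            int count = 0;
            for (int label : labels) {
                if (label == c) count++;
            }
            double auc = aucForClass(probs, labels, c);
            if (count == 0 || Double.isNaN(auc)) continue; // class absent or no pairs
            total += count * auc;
            counted += count;
        }
        return counted == 0 ? Double.NaN : total / counted;
    }

    public static void main(String[] args) {
        // Made-up predicted class probabilities for four validation instances.
        double[][] probs = { {0.9, 0.1}, {0.6, 0.4}, {0.65, 0.35}, {0.2, 0.8} };
        int[] labels = { 0, 0, 1, 1 };
        System.out.println(weightedAuc(probs, labels, 2));
    }
}
```

Unlike percentage correct, this score depends on how the predicted probabilities rank the instances, not only on which class crosses the decision threshold.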

- Parallel Multi-threaded Implementations