Gesture Recognition and Human-Computer Interaction

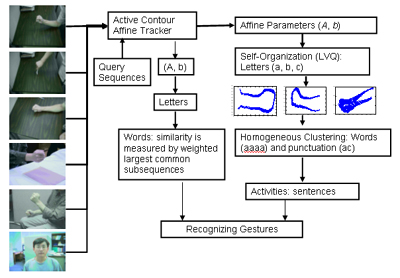

Many gestures can be represented by the motion of the human body. This work presents a framework that extracts the motion trajectories of body parts (arm and hand, head, and shoulder), which constitute the gestures, and recognizes them using a linguistic approach. Each trajectory is obtained with an active contour tracker and represented by six-dimensional (6D) affine motion parameters. Learning Vector Quantization (LVQ) abstracts these affine motion parameters into atomic motions, the "LETTERS", which provides a mapping from signals to symbols. Clustering then groups sequences of letters into "WORDS", which represent actions and the transitions between them. Combinations of words form activities, the "SENTENCES". Activities are recognized through weighted longest common subsequence (LCS) matching. Our experiments demonstrate that the framework is effective, efficient, and robust, and that it provides an intelligent, perception-based tool for human-computer interaction.
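The following is a minimal Python sketch of two stages of the pipeline described above: the signal-to-symbol mapping ("LETTERS") and the LCS-based recognition of activities ("SENTENCES"). The source does not specify the LVQ variant, the method for clustering letters into words, or how the LCS matches are weighted, so the sketch rests on these assumptions. It uses the standard LVQ1 update rule over 6D affine vectors, with each prototype labeled by a letter. It gives each word a weight and scores two word sequences by the maximum total weight of the words they share in order. It recognizes an activity by choosing the template with the highest score normalized by that template's total weight. The clustering of letters into words is not shown. All function names, template activities, and weights are hypothetical.

```python
import numpy as np


# --- Letters: LVQ1 signal-to-symbol mapping (assumed variant) ---

def lvq1_train(X, y, prototypes, proto_labels, lr=0.1, epochs=20):
    """Refine labeled codebook vectors with the LVQ1 rule (hypothetical helper).

    X: (n, 6) array of affine motion vectors; y: letter label per row.
    prototypes: (k, 6) initial codebook; proto_labels: letter per prototype.
    """
    W = np.asarray(prototypes, dtype=float).copy()
    for epoch in range(epochs):
        alpha = lr * (1.0 - epoch / epochs)  # linearly decaying learning rate
        for x, label in zip(X, y):
            k = np.argmin(np.linalg.norm(W - x, axis=1))  # nearest prototype
            sign = 1.0 if proto_labels[k] == label else -1.0
            # pull the winner toward x if its letter is correct, push it away if not
            W[k] += sign * alpha * (x - W[k])
    return W


def quantize(X, W, proto_labels):
    """Map each affine vector to the letter of its nearest prototype."""
    d = np.linalg.norm(X[:, None, :] - W[None, :, :], axis=2)
    return [proto_labels[k] for k in np.argmin(d, axis=1)]


# --- Sentences: weighted LCS matching over word sequences (assumed weighting) ---

def weighted_lcs(a, b, weight):
    """Maximum total weight of a common (in-order) subsequence of a and b."""
    m, n = len(a), len(b)
    dp = [[0.0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])  # skip a word in a or in b
            if a[i - 1] == b[j - 1]:
                # a matching word extends the common subsequence by its weight
                dp[i][j] = max(dp[i][j], dp[i - 1][j - 1] + weight(a[i - 1]))
    return dp[m][n]


def recognize(observed, templates, weight):
    """Return the name of the template activity that best matches `observed`."""
    def score(name):
        template = templates[name]
        total = sum(weight(w) for w in template)
        # normalize so that long templates do not win just by being long
        return weighted_lcs(observed, template, weight) / total if total > 0 else 0.0
    return max(templates, key=score)
```

For example, with hypothetical templates `"wave": ["raise", "swing_left", "swing_right", "swing_left", "lower"]` and `"point": ["raise", "extend", "hold", "lower"]`, a weight of 2.0 for the swing and extend words and 1.0 for all others, the observed words `["raise", "swing_left", "swing_right", "lower"]` score 6/8 = 0.75 against "wave" and 2/5 = 0.4 against "point". `recognize` therefore returns "wave". Weighting lets discriminative words count more than common ones such as "raise", and LCS matching tolerates missing or extra words in the observed sequence.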

(Figures: Framework Diagram; Sample Video Sequences)