Feature Selection and Vision Based Location Recognition

This work presents a probabilistic environment model that supports global localization through location recognition from visual sensing, a capability central to basic mobile robot navigation and map building. In the exploration stage, the environment is partitioned into several locations, each represented by a set of representative views with scale-invariant features. Each feature is encoded by a SIFT descriptor, which can be matched robustly between query and model views despite changes in contrast and scale, affine distortion, and partial occlusion. To obtain a compact environment model, we study the feature selection problem and use Parzen density estimation to select informative features with high discrimination capability. We further employ a strangeness measure to select the most informative features in the query view and to estimate the probability of the most likely location given the query image. Ambiguities due to self-similarity and dynamic changes in the environment are resolved by exploiting the spatial relationships between locations, captured by a Hidden Markov Model. Our experiments demonstrate that the feature selection procedures in both the training and testing stages are effective and efficient for part-based object/location recognition, and that the overall strategy is efficient and robust for both local and global mobile robot localization. The approach significantly broadens the scope of existing part-based object/location representations and of vision-based mobile robot navigation techniques.
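As a rough illustration of how Parzen density estimation could rank features by discrimination capability, the sketch below scores each training descriptor by the posterior probability of its own location. This is not the authors' implementation: the Gaussian kernel, the bandwidth `sigma`, the uniform location priors, the leave-one-out estimate, and the toy 2-D descriptors (real SIFT descriptors are 128-dimensional) are all assumptions made for the example, and the function names are hypothetical.

```python
import math

def parzen_density(x, samples, sigma):
    """Gaussian-kernel Parzen estimate of p(x) from samples.

    The kernel's normalizing constant is omitted: it is identical for
    every location (same sigma, same dimension) and cancels in the
    posterior ratio computed below.
    """
    if not samples:
        return 0.0
    total = 0.0
    for s in samples:
        d2 = sum((a - b) ** 2 for a, b in zip(x, s))
        total += math.exp(-d2 / (2.0 * sigma ** 2))
    return total / len(samples)

def rank_features(features_by_location, sigma=0.5):
    """Rank each location's features by P(location | feature).

    Assumes uniform priors over locations. A feature's own-location
    density is estimated leave-one-out so it does not vote for itself.
    Returns {location: [(posterior, feature), ...]}, best first.
    """
    ranked = {}
    for loc, feats in features_by_location.items():
        scored = []
        for i, f in enumerate(feats):
            own = feats[:i] + feats[i + 1:]
            dens = {
                other: parzen_density(f, own if other == loc else ofeats, sigma)
                for other, ofeats in features_by_location.items()
            }
            z = sum(dens.values())
            scored.append((dens[loc] / z if z > 0 else 0.0, f))
        scored.sort(key=lambda t: t[0], reverse=True)
        ranked[loc] = scored
    return ranked

# Toy data (hypothetical): the feature (1.0, 1.0) in location "A" lies
# close to features of location "B", so it should rank last for "A".
toy_features = {
    "A": [(0.0, 0.0), (0.1, 0.0), (1.0, 1.0)],
    "B": [(1.0, 1.1), (1.1, 1.0), (2.0, 2.0)],
}
toy_ranking = rank_features(toy_features)
```

Keeping only the top-ranked features per location gives a more compact model; how many to keep is a tuning choice that this sketch leaves open.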

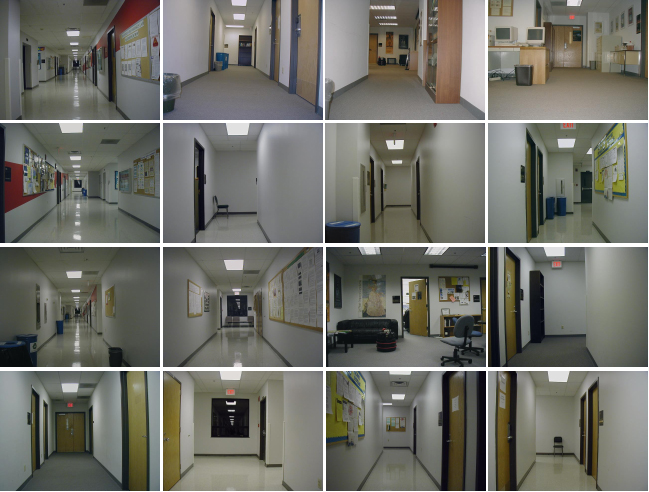

Figure panels: representative views of 12 of the 18 locations; detected features (each a local region); selected discriminative features.

The top selected discriminative features for each location (shown for Locations 1, 2, 7, and 8).

Recognition results using maximum likelihood alone and using an HMM to exploit the spatial relationships between locations:

Test 1: maximum likelihood 85.8%, HMM 95.5%

Test 2: maximum likelihood 80.8%, HMM 95.4%
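To illustrate why spatial relationships can correct per-frame errors, the following minimal sketch compares a frame-by-frame maximum-likelihood decision with HMM forward filtering on a toy sequence. It is an assumption-laden example rather than the method used in the experiments: the three-location transition matrix, the uniform prior, the per-frame likelihood values, and the choice of forward filtering (instead of, say, Viterbi decoding) are all made up for illustration.

```python
def max_likelihood_path(likelihoods):
    """Pick the most likely location independently for each frame."""
    return [max(range(len(obs)), key=lambda j: obs[j]) for obs in likelihoods]

def hmm_filter_path(likelihoods, transition, prior):
    """HMM forward filtering over locations.

    likelihoods[t][j] is p(observation at frame t | location j),
    transition[i][j] is P(location j at t | location i at t-1).
    Returns the most probable location after each frame.
    """
    n = len(prior)
    belief = list(prior)
    path = []
    for obs in likelihoods:
        # Prediction step: propagate the belief through the transition model.
        predicted = [sum(belief[i] * transition[i][j] for i in range(n))
                     for j in range(n)]
        # Update step: weight by the frame's likelihoods and renormalize.
        unnorm = [predicted[j] * obs[j] for j in range(n)]
        z = sum(unnorm)
        belief = [u / z for u in unnorm] if z > 0 else predicted
        path.append(max(range(n), key=lambda j: belief[j]))
    return path

# Hypothetical corridor of three locations: 0 and 2 are not adjacent.
toy_transition = [
    [0.90, 0.10, 0.00],
    [0.05, 0.90, 0.05],
    [0.00, 0.10, 0.90],
]
toy_prior = [1.0 / 3.0] * 3
# The robot stays in location 0; the third frame is ambiguous and
# slightly favors location 2 (for example, due to self-similarity).
toy_likelihoods = [
    [0.7, 0.2, 0.1],
    [0.6, 0.3, 0.1],
    [0.3, 0.3, 0.4],
    [0.7, 0.2, 0.1],
]

ml_path = max_likelihood_path(toy_likelihoods)
hmm_path = hmm_filter_path(toy_likelihoods, toy_transition, toy_prior)
print(ml_path)   # [0, 0, 2, 0]
print(hmm_path)  # [0, 0, 0, 0]
```

In this toy run the maximum-likelihood decision jumps to location 2 on the ambiguous frame, while the filtered estimate stays in location 0 because a direct move from location 0 to location 2 has zero probability under the assumed transition model.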

Experimental Data Set: Training Sequence and Test 1 and Test 2 Sequences [zip files].

Detailed information and the paper are available upon request: fli1@gmu.edu or fli@cs.gmu.edu.

Acknowledgement: This work was supported by NSF grant IIS-0347774.