Generating high-quality explanations for navigation in partially-revealed environments

Imagine you’re in an unfamiliar university building and wish to navigate to an unseen goal that you know is 100 meters away in a leftward direction; how do you get there in minimum expected time?

Chances are, you follow the hallway. The classroom is likely a dead end, and so entering it in an effort to reach the goal is unlikely to prove fruitful, since you’ll probably have to turn back around. But what happens if a robot, given the same task, behaves in a way that’s unexpected and instead enters the classroom? You might want to understand why the agent behaved the way it did. It should explain its decision to you so that you can interpret its decision-making process and, if desired, use that understanding to correct bad behavior like this.

In our work, we develop a procedure that allows the agent to generate a counterfactual explanation of its behavior. I might wish to ask the robot “Why did you not follow the hallway?” and have it meaningfully respond with something like this:

I would have followed the hallway if I instead believed that the classroom were significantly less likely to lead to the goal (10%, down from 90%); and believed that the hallway were slightly more likely to lead to the goal (95%, up from 75%).

This explanation, provided automatically by the agent, reveals much about its internal decision-making process, including that its initial belief assigned a far-too-high likelihood to the classroom leading to the unseen goal. This explanation indicates that we might want to change the robot’s predictive model, since its initial predictions led it astray and would have resulted in poor performance, and prescribes how we might do that to get the behavior we seek.

But how can we design an agent that’s capable of generating such an explanation? How can we ensure that the explanations are any good? And how can we use the explanations to both identify and correct bad behavior? In the post below, we discuss our recent NeurIPS 2021 paper, Generating High-Quality Explanations for Navigation in Partially-Revealed Environments, which seeks to provide answers to these questions. This post is lengthy (at 4,000 words, it is about a 15-minute read) and is geared towards a somewhat technically-minded audience. However, I have tried to provide enough context that I expect it will also be of interest to non-roboticists. There is also a 13-minute video presentation that has significant overlap with this post, which you may enjoy instead.

The challenge of explainable planning under uncertainty

When only part of the world is known to the robot, it must understand how unseen space is structured so that it can reason about the goodness of trajectories that leave known space. For instance (and as seen above), whether the agent enters the classroom may depend on how likely it is that the classroom leads to the goal. I’ve written before about how difficult planning under uncertainty can be: making even seemingly simple predictions about the future is fraught with challenges, since it can be difficult to predict what lies just around the corner, much less one hundred meters away.

Many state-of-the-art approaches to planning under uncertainty rely on machine learning to help make good decisions about where to go or what to do [Wayne et al, 2018 (MERLIN)]. However, most of these are so-called model-free planning strategies trained via deep reinforcement learning and are both known to be somewhat unreliable and not well-suited to explaining their decision-making process. Indeed there are tools that exist to help explain the predictions of machine learning systems [Chen et al, 2019, Sundararajan et al, 2017], including those that rely on convolutional neural networks to make predictions from images collected onboard the robot. Yet these tools are typically designed to explain the predictions from single sensor observations or images, and are not well-suited to explain long-horizon planning decisions, which must consider many observations simultaneously.

If we are to welcome robots into our homes and trust them to make decisions on their own, they should perform well despite uncertainty and be able to clearly explain both what they are doing and why they are doing it in terms that both expert and non-expert users can understand. As learning (particularly deep learning) is increasingly used to inform decisions, it is both a practical and an ethical imperative that we ensure that data-driven systems are easy to inspect and audit both during development and after deployment.

Designing an agent that both achieves state-of-the-art performance and can meaningfully explain its actions has so far proven out of reach for existing approaches to long-horizon planning under uncertainty.

In our paper, we sought an alternate representation for learning-informed planning that will allow for both good performance and explainable decision-making.

Criteria for interpretable-by-design navigation under uncertainty

While it may be clear at this point that we want some new way of representing the world—so that we can allow an agent to think far into the future and explain to us its thought process—it’s not yet clear what that new representation will look like. As mentioned above, so-called model-free approaches won’t cut it; these approaches are characterized by their lack of an ability to explicitly reason about the future, choosing instead to try to directly learn the relationship between input sensor observations and good behavior. The lack of a model means that it is difficult for them to communicate to humans, who seem to naturally tend to plan in terms of how their actions will impact the future. Instead, the robot must be able to think more as humans seem to when planning under uncertainty: explicitly thinking about unseen space and the potential impact of their actions.

A number of recent works consider what is implied by interpretability (a prerequisite for generating explanations) and propose taxonomies and criteria by which we understand how interpretable a model for planning is. In our paper, we propose the following three criteria for evaluating the interpretability of a model that relies on learning to plan under uncertainty, motivated by those of Zach Lipton [Lipton, 2018]:

1. Decisions available to the agent should reflect how a human might describe their own decision-making given the same information.
2. High-level planning should rely on a model and explicitly consider the impact of the agent’s actions into the future.
3. The impact of actions, particularly those that enter unknown space, should be expressible via a small number of human-understandable quantities.

Some good papers that discuss the taxonomies of interpretability and explainability of machine learning systems include [Rudin, 2019, Lipton, 2018, Gilpin et al, 2018, Suresh et al, 2021].

See our paper for details on why these criteria were chosen as well as some more detail about their implications.

Subgoal-based planning

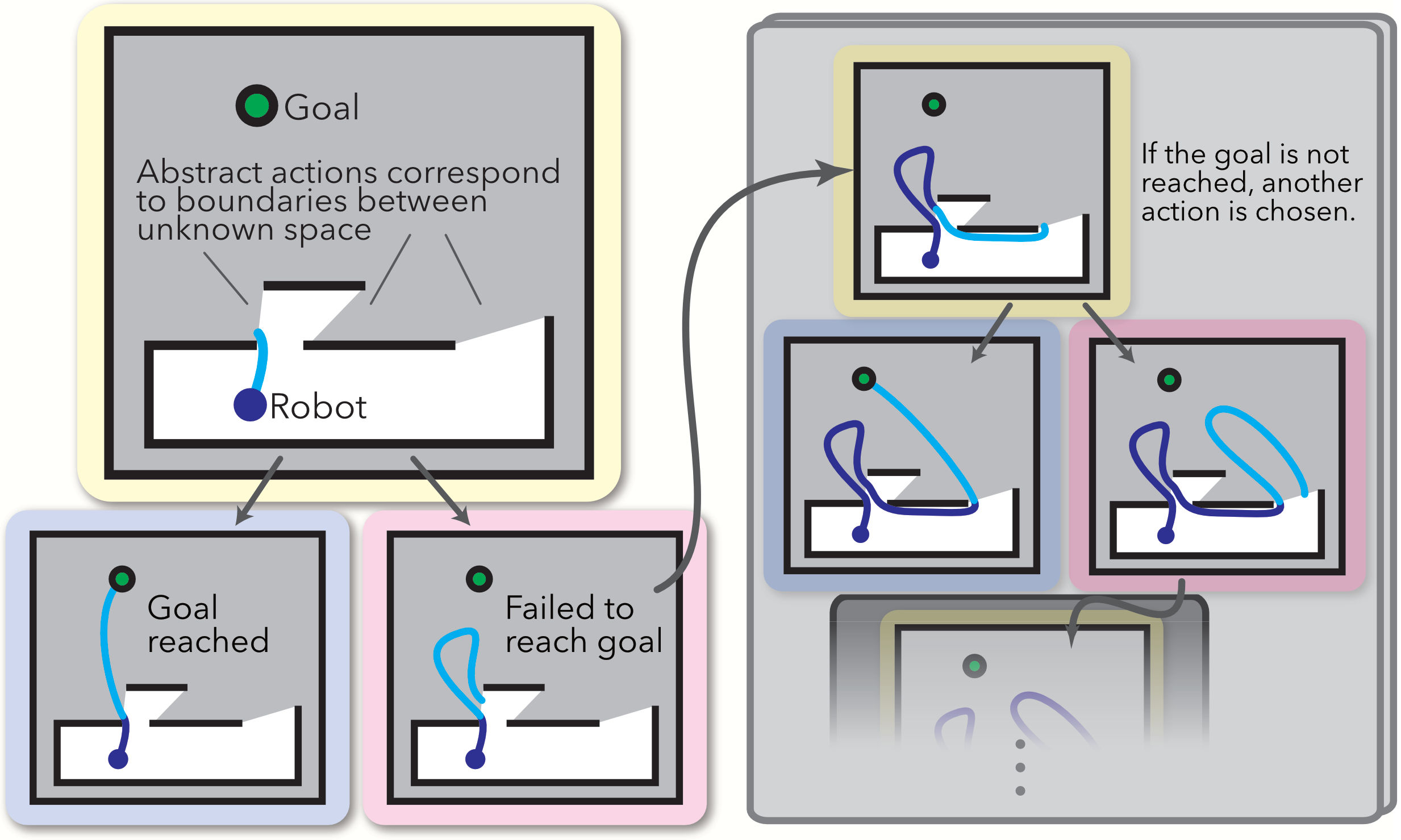

In our previous work, we presented a representation that happens to satisfy these criteria, and it is a key insight of our paper that our previous Learning over Subgoals planning abstraction is well-suited for explanation generation [Stein, Bradley, and Roy, 2018]. Under this model the robot must select between “subgoals.” Each subgoal corresponds to a region of unseen space the agent can explore to try to reach the goal, like the classroom or hallway in our earlier example. Since the subgoals define the agent’s actions, the robot’s policy is the order in which it will explore the space beyond the subgoals. In our example, the agent’s policy is to first explore the classroom and then, if it fails to reach the goal, turn back and continue down the hallway. Finally, we use machine learning to estimate properties associated with exploring beyond a subgoal, including the likelihood the subgoal will lead to the goal.

Given estimates of the subgoal properties, we can compute the expected cost of a policy. Under the Learning over Subgoals model, this expected cost consists of the expected cost of the first subgoal-action, plus the weighted expected cost of the next, and so on. Because the goodness of a plan under this model is interpretable, we can use the interpretable subgoal properties to understand why the robot might prefer one plan over another. On a toy example, the agent’s policy looks something like this:

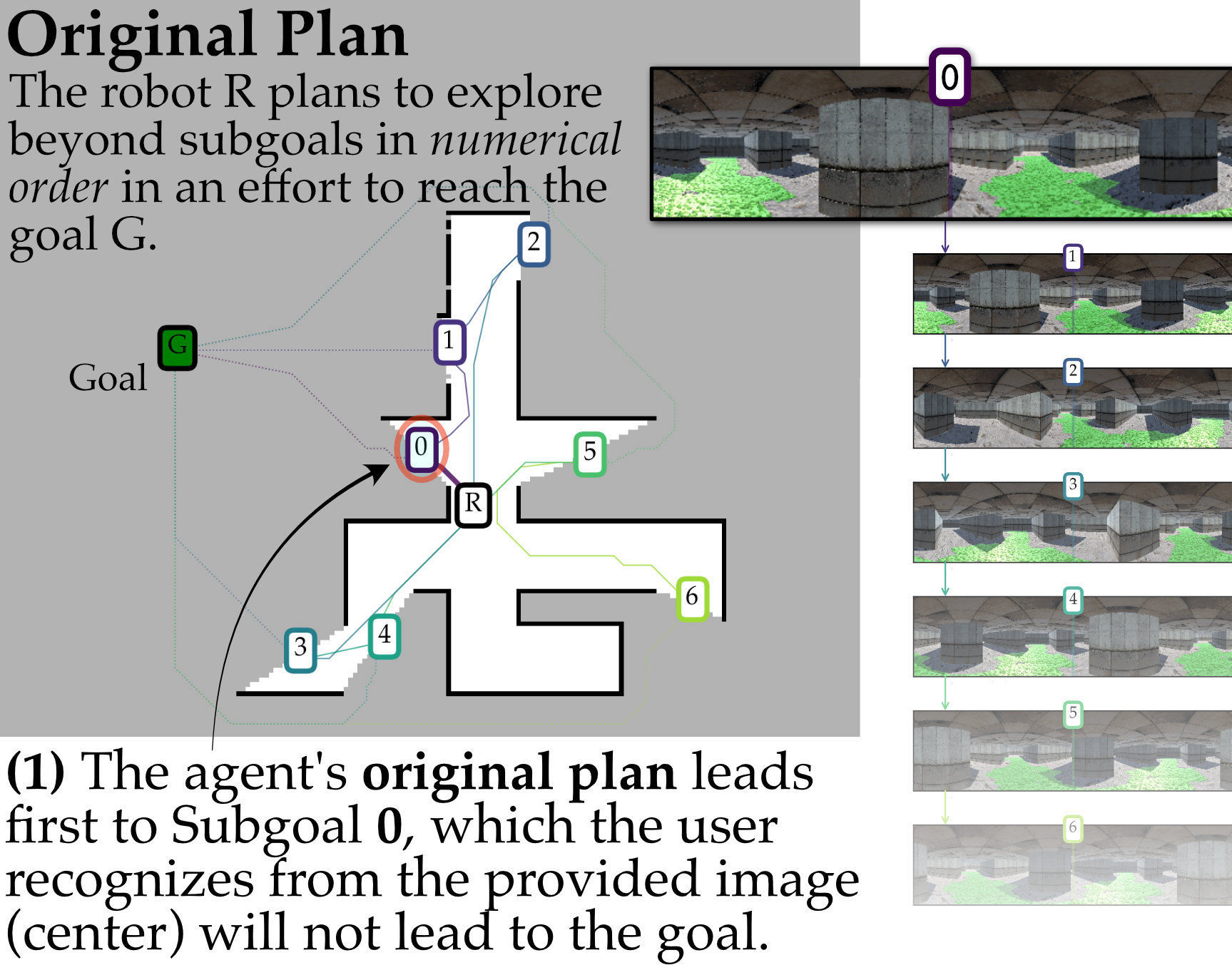

Here’s the robot’s plan over time in our guided maze simulated environment:

The map on the left shows the top-down view of the agent’s map with the three subgoals annotated in the order in which the agent aims to explore them in an effort to reach the goal. Its policy is determined by its estimates of the subgoal properties derived from its onboard panoramic camera. As navigation proceeds, the agent’s map is updated and planning is re-run at every time step until the goal is discovered.
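As a rough illustration of the expected-cost computation described above, the sketch below scores an ordering of subgoals and picks the cheapest one. It is a simplification under stated assumptions, not the paper's implementation: the `Subgoal` fields, the `distance` table, and every numeric value are hypothetical, and each step's cost is assumed to be the travel cost plus the likelihood-weighted success and failure costs.

```python
from dataclasses import dataclass
from itertools import permutations


@dataclass(frozen=True)
class Subgoal:
    name: str
    prob_leads_to_goal: float  # estimated likelihood the subgoal leads to the goal
    success_cost: float        # assumed cost beyond the subgoal if it does
    failure_cost: float        # assumed cost of exploring and turning back if not


def expected_cost(order, distance, start="robot"):
    """Expected cost of exploring the subgoals in the given order."""
    total = 0.0
    prob_still_searching = 1.0  # chance that every earlier subgoal failed
    here = start
    for sg in order:
        p = sg.prob_leads_to_goal
        step = distance[(here, sg.name)] + p * sg.success_cost + (1 - p) * sg.failure_cost
        total += prob_still_searching * step
        prob_still_searching *= 1 - p
        here = sg.name
    return total


def best_order(subgoals, distance):
    """Enumerate all orderings and return the one with the lowest expected cost."""
    return min(permutations(subgoals), key=lambda order: expected_cost(order, distance))


# Hypothetical numbers loosely following the classroom/hallway example.
classroom = Subgoal("classroom", 0.90, success_cost=10.0, failure_cost=8.0)
hallway = Subgoal("hallway", 0.75, success_cost=10.0, failure_cost=20.0)
distance = {
    ("robot", "classroom"): 2.0, ("robot", "hallway"): 2.0,
    ("classroom", "hallway"): 3.0, ("hallway", "classroom"): 3.0,
}

plan = best_order([classroom, hallway], distance)
print([sg.name for sg in plan])  # ['classroom', 'hallway']
```

With these made-up estimates the classroom-first ordering is cheaper (about 13.35 versus 17.7), which mirrors how an overconfident likelihood estimate can pull the agent into the classroom.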

Generating counterfactual explanations of high-level behavior

Let’s look at a toy example similar to our hallway-classroom scenario above. Here the agent finds itself in the Guided Maze environment shown above, in which the illuminated green tiles indicate the most likely (by far) route to reach the unseen goal. Yet in this scenario, the agent mistakenly believes it should first explore Subgoal 0. The agent incorrectly predicts that Subgoal 0 is likely to lead to the goal, yet the green path leads elsewhere. Instead, a knowledgeable agent should know to first pursue Subgoal 1 to reach the goal most quickly. This behavior is poor and so we seek to understand it.

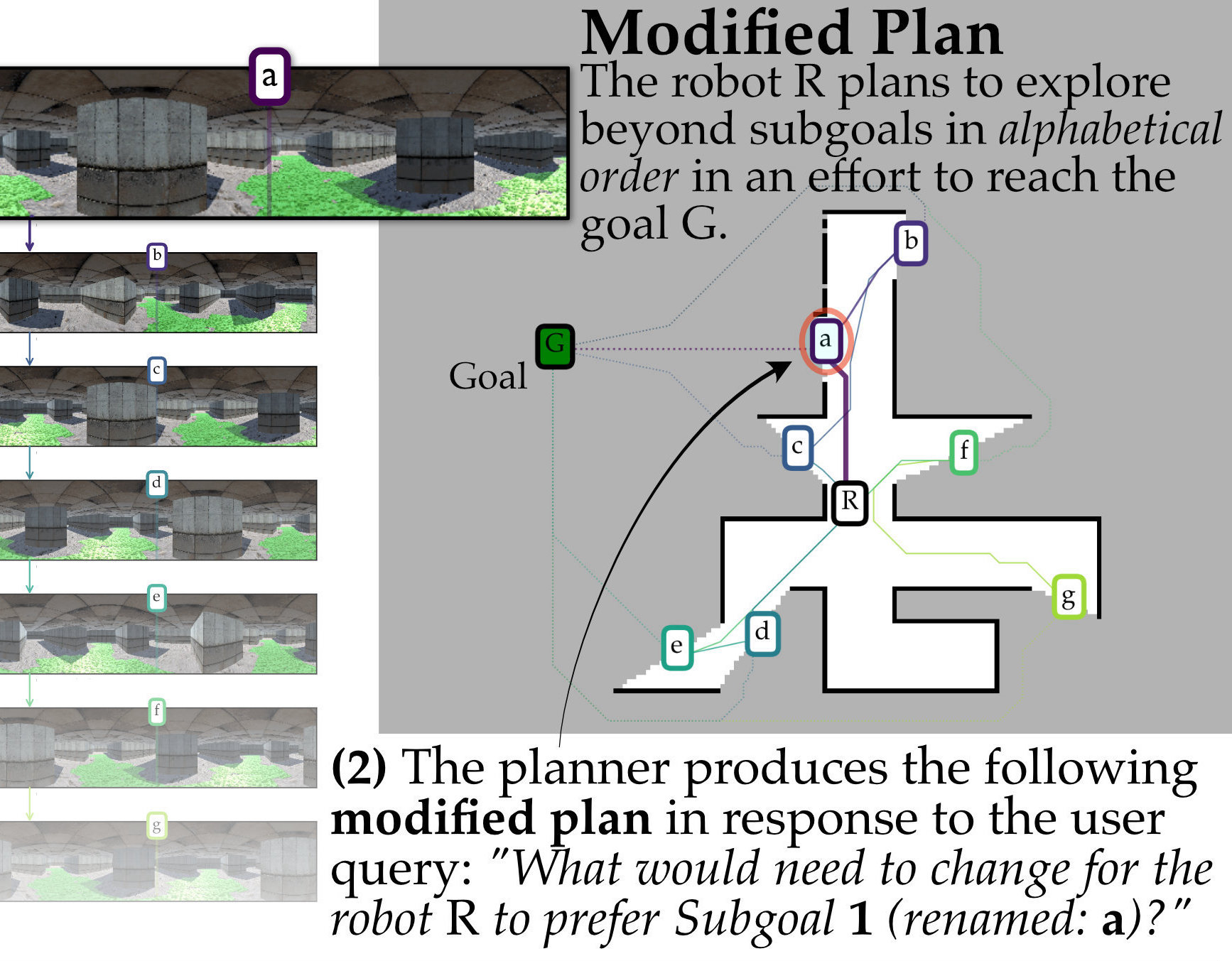

As mentioned above, the Learning over Subgoals planning abstraction gives us a way to relate predictions about unseen space made by the agent and the expected cost of the agent’s behavior. In this case, the agent has chosen to first explore beyond Subgoal 0 because, based on its (incorrect) estimates about unseen space, exploring Subgoal 1 first would have higher expected cost. If we are to change the agent’s behavior, we need to change its mind on this point. We compare the expected cost associated with the two plans (one in which Subgoal 0 is explored first and the other in which Subgoal 1 is explored first) and update the agent’s learned model until the behavior changes. Because the Learning over Subgoals model is differentiable (once the policy is fixed), we use gradient descent to compute this change, which yields the smallest possible change to the learned model that gives rise to this desired change in behavior. The changes in the subgoal properties as a result of this process form the backbone of our explanation; we use a simple grammar to turn those changes from numbers into prose.

The learned model estimates the subgoal properties from images the robot collected during travel. Backpropagation therefore includes the networks used to estimate these properties. We use a neural net to estimate the properties from images, use those properties to estimate expected cost, and then compare the two policies: this entire process is differentiated for gradient descent.
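The gradient-descent procedure described above can be sketched on a toy problem. This is only an illustration under loud assumptions: the subgoal likelihoods are parameterized directly by logits rather than produced by a neural network, central finite differences stand in for backpropagation, travel costs are treated as fixed per subgoal, and the names `counterfactual`, `cost_gap`, and `props` as well as all numbers are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def logit(p):
    return math.log(p / (1.0 - p))


def expected_cost(order, logits, props):
    """Expected cost of exploring subgoals in `order` (simplified model)."""
    total, prob_still_searching = 0.0, 1.0
    for name in order:
        p = sigmoid(logits[name])
        travel, success_cost, failure_cost = props[name]
        total += prob_still_searching * (travel + p * success_cost + (1 - p) * failure_cost)
        prob_still_searching *= 1 - p
    return total


def cost_gap(logits, props, chosen, queried):
    """Positive while the agent still prefers `chosen` over `queried`."""
    return expected_cost(queried, logits, props) - expected_cost(chosen, logits, props)


def counterfactual(logits, props, chosen, queried, lr=0.05, eps=1e-5, max_steps=10_000):
    """Descend on the cost gap until the queried plan becomes the cheaper one."""
    logits = dict(logits)
    for _ in range(max_steps):
        if cost_gap(logits, props, chosen, queried) < 0:
            break
        grads = {}
        for name in logits:  # numerical gradient, a stand-in for backpropagation
            up = {**logits, name: logits[name] + eps}
            down = {**logits, name: logits[name] - eps}
            grads[name] = (cost_gap(up, props, chosen, queried)
                           - cost_gap(down, props, chosen, queried)) / (2 * eps)
        for name in logits:
            logits[name] -= lr * grads[name]
    return logits


# Hypothetical (travel, success, failure) costs and initial beliefs.
props = {"classroom": (2.0, 10.0, 8.0), "hallway": (2.0, 10.0, 20.0)}
before = {"classroom": logit(0.90), "hallway": logit(0.75)}
after = counterfactual(before, props,
                       chosen=["classroom", "hallway"],
                       queried=["hallway", "classroom"])
for name in before:
    print(name, round(sigmoid(before[name]), 2), "->", round(sigmoid(after[name]), 2))
```

Stopping at the first iterate for which the queried plan is cheaper keeps the change to the estimates small, and the difference between `before` and `after` is the raw material for the explanation.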

For our scenario above, we ask the robot “Why did you not first travel to Subgoal 1?” It automatically generates the following counterfactual explanation:

I would have preferred Subgoal 1 if I instead believed that Subgoal 0 were significantly less likely to lead to the goal (36%, down from 98%) and that the cost of getting to the goal through Subgoal 0 were slightly increased (6.0 m, up from 5.7 m); and believed that Subgoal 1 were slightly more likely to lead to the goal (94%, up from 86%) and that the cost of getting to the goal through Subgoal 1 were slightly decreased (5.8 m, down from 6.0 m).

We could also generate an explanation for good behavior. A robot that followed the hallway might point out that it would have entered the classroom if the likelihood of the classroom leading to the goal were significantly higher. In this case, the explanation would be reassurance that the agent’s predictions are already pretty good and that we should continue to trust it.
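To make the "simple grammar" idea concrete, here is a small sketch that turns changes in likelihood estimates into prose resembling the explanation above. It is not the grammar used in the paper: the function names are hypothetical, the 0.3 threshold separating "slightly" from "significantly" is an assumed value, and the cost clauses are omitted for brevity.

```python
def magnitude(change, threshold=0.3):
    """Qualitative size of a change; the threshold is an assumed value."""
    return "significantly" if abs(change) > threshold else "slightly"


def describe_likelihood(name, old, new, threshold=0.3):
    """One clause describing how a subgoal's likelihood estimate changed."""
    direction = "more" if new > old else "less"
    moved = "up" if new > old else "down"
    return (
        f"{name} were {magnitude(new - old, threshold)} {direction} likely "
        f"to lead to the goal ({new:.0%}, {moved} from {old:.0%})"
    )


def explain(preferred, changes):
    """Join per-subgoal clauses into a single counterfactual sentence."""
    clauses = [describe_likelihood(name, old, new) for name, old, new in changes]
    return (
        f"I would have preferred {preferred} if I instead believed that "
        + "; and believed that ".join(clauses)
        + "."
    )


print(explain("Subgoal 1", [("Subgoal 0", 0.98, 0.36), ("Subgoal 1", 0.86, 0.94)]))
```

A template like this keeps the mapping from numbers to words fixed and easy to audit, so the prose cannot drift from the underlying change in estimates.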

Our explanations also have a visual component that allows us to see the changes to the agent’s plan proposed by the explanation:

You may also notice that our explanations only contain a handful of subgoal properties as opposed to all the changes that occur as the learned model is updated during explanation generation. Our paper includes additional discussion of how we limit the number of subgoal properties we report without sacrificing the accuracy of the explanations.

Training-by-explaining: validating that the explanations are high-quality

Because gradient descent is used to compute the explanations from the model used for planning, explanations are guaranteed to be accurate, but how can we determine how useful the explanations are? A high-quality explanation is one that is both accurate and sufficiently rich with information that it can be used to correct bad behavior. In short: how can we ensure that the explanations are good enough to correct poor behavior in general?

To address this question we must discuss how our agent is trained: i.e., where its data comes from and how it uses that data to tune the parameters of its learned model. If you are familiar with how neural networks are trained, you may already have guessed at an answer: many modern machine learning approaches are trained via gradient descent, and the model parameters are tuned until some target output is reached. Our training process is designed so that it mirrors our explanation process. The agent’s explanation generation procedure involves using gradient descent to update the agent’s learned model until a desired change in behavior occurs. Similarly, when training, we use gradient descent to update the agent’s model. The agent is trained by iterating over a corpus of decision-points until its behavior matches that of an oracle. As such, a single training iteration can be thought of as taking one step towards generating an explanation.

The inverse is also true: generating an explanation is like training the agent on a single training instance until its behavior changes.
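The correspondence between training and explanation can be illustrated with a toy training loop. Everything here is a hypothetical stand-in: the learned model is a one-feature logistic function rather than a neural network operating on images, the corpus holds two hand-made decision points, the costs are invented, and finite differences replace backpropagation. Each training step is one gradient step on the same cost gap an explanation would descend.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def likelihood(params, feature):
    """Toy learned model: one feature -> likelihood a subgoal leads to the goal."""
    w, b = params
    return sigmoid(w * feature + b)


def expected_cost(order, params, travel=2.0, success=10.0, failure=20.0):
    """Expected cost of exploring subgoals (given by their features) in order."""
    total, still_searching = 0.0, 1.0
    for feature in order:
        p = likelihood(params, feature)
        total += still_searching * (travel + p * success + (1 - p) * failure)
        still_searching *= 1 - p
    return total


def agent_choice(params, subgoals):
    """Index of the subgoal the agent explores first (two-subgoal case)."""
    a, b = subgoals
    return 0 if expected_cost([a, b], params) <= expected_cost([b, a], params) else 1


def training_step(params, subgoals, oracle_first, lr=0.05, eps=1e-5):
    """One gradient step that makes the oracle's ordering relatively cheaper."""
    first, other = subgoals[oracle_first], subgoals[1 - oracle_first]

    def gap(p):
        return expected_cost([first, other], p) - expected_cost([other, first], p)

    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((gap(up) - gap(down)) / (2 * eps))
    return [p - lr * g for p, g in zip(params, grads)]


# Each decision point: (features of the two subgoals, oracle's first choice).
corpus = [((1.0, 0.0), 0), ((0.0, 1.0), 1)]
params = [-1.0, 0.0]  # initially the agent prefers the wrong subgoal
for epoch in range(100):
    mistakes = [(sg, o) for sg, o in corpus if agent_choice(params, sg) != o]
    if not mistakes:
        break
    for sg, o in mistakes:
        params = training_step(params, sg, o)

print(all(agent_choice(params, sg) == o for sg, o in corpus))
```

Running `training_step` repeatedly on a single decision point until `agent_choice` flips is, in this toy setting, the same computation as generating an explanation for that decision.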

Why does this matter? Because the agreement between our training process and our explanations connects the performance metrics associated with each; our training-by-explaining procedure links the quality of our explanations and test-time performance. If the agent performs well, it implies that, in aggregate, explanations are sufficiently information-rich that they can be used to train the agent from scratch and correct behavior. Our agent outperforms both a learned and a non-learned baseline planner in two simulated environments (thousands of trials in both the guided maze environment and a significantly-more-complicated University Buildings environment). We achieve state-of-the-art performance in this challenging domain, a confirmation that our agent’s explanations are high-quality.

Explainable Interventions: correcting behavior via explanation generation

Our training-by-explaining procedure shows that the explanations are in the aggregate rich with information, but what about individual explanations? In this final set of experiments, we sought to understand how we might be able to rely on a single explanation to help us correct bad behavior, intervening when we decide it’s necessary.

These explainable interventions are made possible by our explanation generation process. Explanations describe how to change the agent’s behavior as computed via our gradient descent procedure. When we intervene to correct bad behavior, we accept the change to the estimated subgoal properties (as computed for and described by the explanation) and use the newly-updated model to plan.

During an intervention, (1) we first identify bad behavior, then (2) generate an explanation of that behavior; (3) a human can then inspect the explanations and, if desired, they can then (4) change the agent’s behavior in a way that is consistent with the explanation.

Our intervention process can be thought of as a type of imitation learning, in which the agent is being retrained online to match the behavior of an oracle at a single point. Our model and procedure make this process explainable, something not possible with existing approaches that use learning to plan under uncertainty.

Human-in-the-loop trials will be a subject of future work. For now, we focus on demonstrating the ability of our explanations to facilitate this change if desired, a measure of the quality of the explanations.

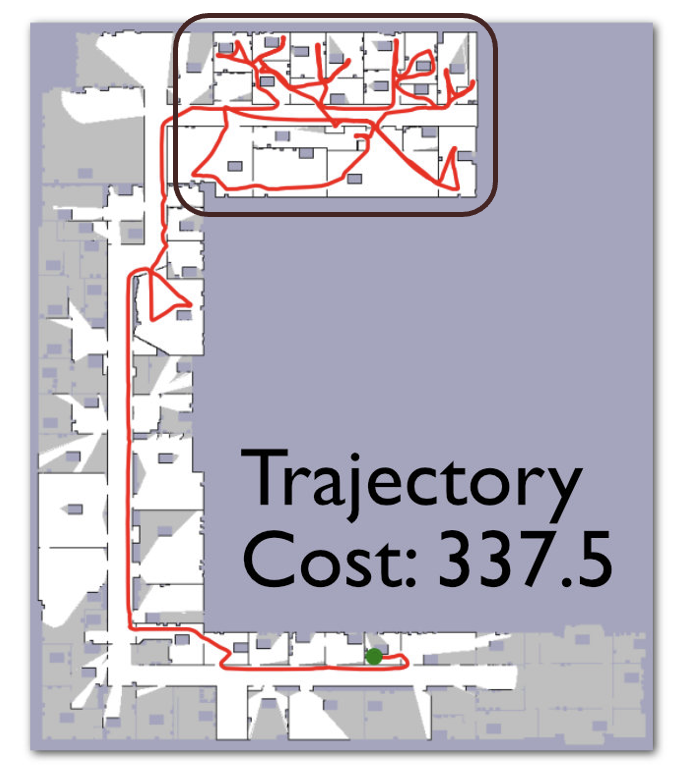

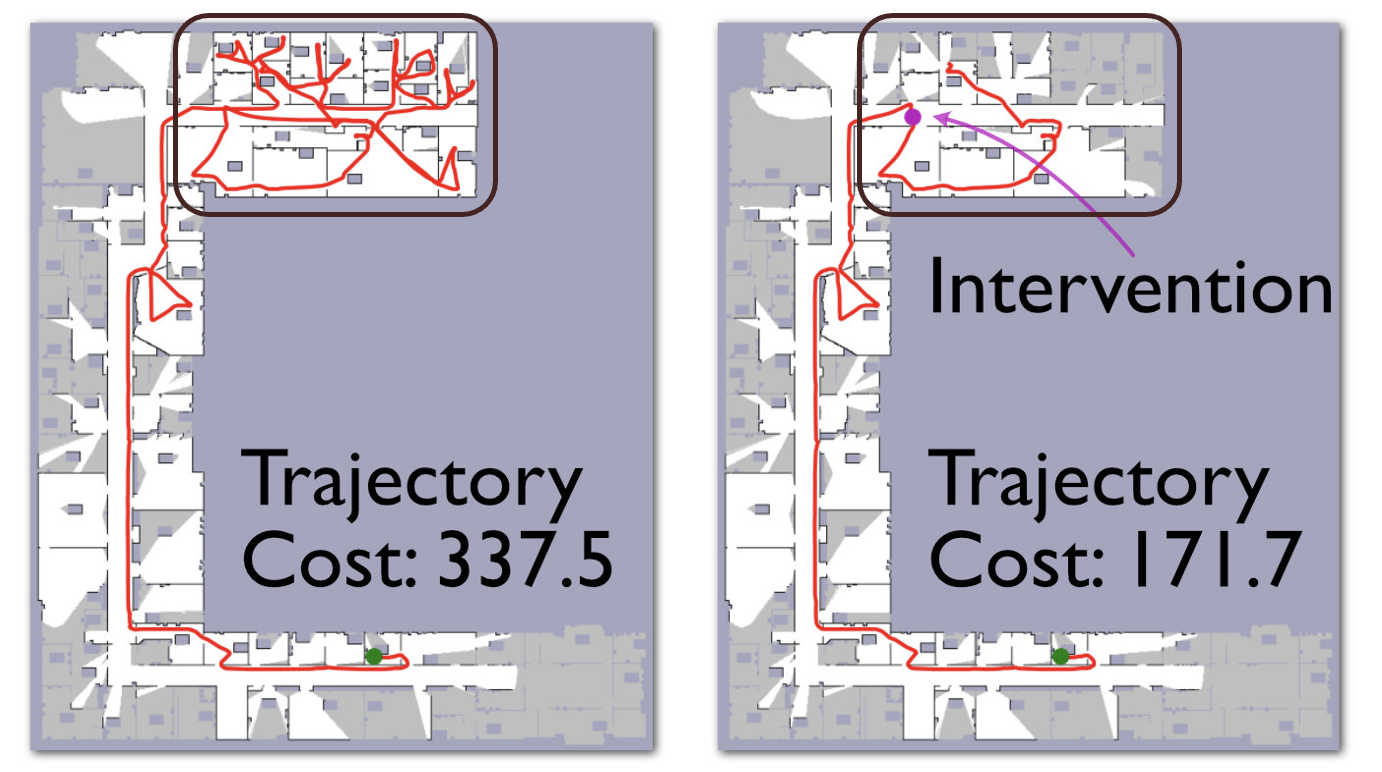

The figure below includes an example from our University Buildings environment. Without intervention, our agent performs poorly and explores many of the classrooms and offices in the upper part of the map before realizing it should follow the hallway. Looking at the agent’s plan at a key decision-point, we can see that the agent turns back to explore the classrooms, rather than continue to follow the hallway:

We intervene at this decision point by generating a counterfactual explanation and using the updated learned model to plan. After intervening, at the purple location highlighted in the right plot, the robot continues down the hallway, avoiding the poor behavior exhibited before intervention. The agent plans with the newly-updated model until it reaches the goal, performing well and demonstrating that our interventions do not problematically degrade performance elsewhere:

We identify 50 poor-performing trials in our University Buildings environment and intervene once in each. Across these trials, we improve overall performance by over 25%. Our results show the utility of our explanations in improving performance: we can both correct bad behavior and do so in a way that is consistent with explanation generation.

Limitations and Future Work

If this approach is to be adopted more broadly and to enable a new generation of trustworthy and capable autonomous agents, there are still a few limitations that will need to be better understood and addressed. The Learning over Subgoals model upon which this approach is built makes some strong assumptions about planning that are untrue in general, including the idea that exploring beyond a region will fully reveal that part of the environment and that the environment is simply connected: that it contains no loops. In environments that violate these assumptions, performance and explanation quality may be prohibitively poor. Getting good planning performance may also require that the agent cheat and estimate (for example) artificially-high likelihoods to bias its behavior against missing potential routes to the goal. The conflicting objectives of high-accuracy estimation versus predicting properties that optimize performance have potentially problematic societal implications; our system’s estimates may mislead the user if they differ from their implied interpretable meaning.

We include significant detail about the limitations of our approach in the final section of the paper.

Overcoming this limitation is tricky, because (to the best of our knowledge) our representation for planning under uncertainty is the only one that is well-suited to this task. To make more advances in this space, novel representations may be required that abstract knowledge about unseen space, yet in a way that does not make such strong assumptions about the nature of the environment. Perhaps hierarchical learning will help make progress in this space, and indeed some hierarchical reinforcement learning approaches that learn skills or options have potential in this regard. Problematically, abstracting away more knowledge at the “highest level” of planning may obfuscate details about the relationship between perception and those models. Our approach, too, suffers from this limitation: while our work elucidates the relationship between the subgoal properties (each a prediction about unseen space) and the agent’s decisions, the relationship between low-level perception (images) and these estimated properties remains somewhat opaque. It is additionally possible to leverage existing tools for inspecting feed-forward neural networks to better understand this relationship (something we briefly explore in Appendix 4 of our Supplementary material), but it remains difficult to understand and will be the subject of future work. Our future work will additionally involve conducting human-in-the-loop trials to better understand how effectively a human can make use of the explanations to understand and audit robot behavior.

Conclusion & References

We have shown how the Learning over Subgoals planning abstraction can be used to generate high-quality explanations for long-horizon planning decisions in partially-revealed environments while allowing for state-of-the-art performance. Our training-by-explaining strategy links test-time performance to the goodness of our explanations, providing an objective measure of explanation quality. Finally, our approach enables explainable interventions, whereby a single explanation is used to correct poor behavior, showing that the explanations are sufficiently rich with information that they can be used to improve performance.

Read our full paper here or feel free to explore our code on GitHub.

- Gregory J. Stein, Generating High-Quality Explanations for Navigation in Partially-Revealed Environments, in: Advances in Neural Information Processing Systems (NeurIPS), 2021.

- Chaofan Chen, Oscar Li, Alina Barnett, Jonathan Su & Cynthia Rudin, This Looks Like That: Deep Learning for Interpretable Image Recognition, in: Neural Information Processing Systems (NeurIPS), 2019.

- Mukund Sundararajan, Ankur Taly & Qiqi Yan, Axiomatic Attribution for Deep Networks, in: International Conference on Machine Learning (ICML), 2017.

- Cynthia Rudin, Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead, Nature Machine Intelligence, 2019.

- Zachary C Lipton, The Mythos of Model Interpretability, Queue, 2018.

- Leilani H. Gilpin, David Bau, Ben Z. Yuan, Ayesha Bajwa, Michael Specter & Lalana Kagal, Explaining Explanations: An Overview of Interpretability of Machine Learning, in: International Conference on Data Science and Advanced Analytics (DSAA), 2018.

- Harini Suresh, Steven R. Gomez, Kevin K. Nam & Arvind Satyanarayan, Beyond Expertise and Roles: A Framework to Characterize the Stakeholders of Interpretable Machine Learning and their Needs, in: CHI Conference on Human Factors in Computing Systems, 2021.

- Greg Wayne, Chia-Chun Hung, David Amos, Mehdi Mirza, Arun Ahuja, Agnieszka Grabska-Barwinska, Jack W. Rae, Piotr Mirowski, Joel Z. Leibo, Adam Santoro, Mevlana Gemici, Malcolm Reynolds, Tim Harley, Josh Abramson, Shakir Mohamed, Danilo Jimenez Rezende, David Saxton, Adam Cain, Chloe Hillier, David Silver, Koray Kavukcuoglu, Matthew Botvinick, Demis Hassabis & Timothy P. Lillicrap, Unsupervised Predictive Memory in a Goal-Directed Agent, arXiv preprint arXiv:1803.10760, 2018.