Jan M. Allbeck, Ph.D.

I've had the opportunity to work on many research projects to varying extents. Below is an archive of some of those projects. More recent work can be found on the Games And Intelligent Animation website.


CAROSA: Crowds with Aleatoric, Reactive, Opportunistic, and Scheduled Actions (2006-present)

Most crowd simulation research focuses either on navigating characters through an environment while avoiding collisions or on simulating very large crowds. This work instead focuses on creating populations that inhabit a space rather than pass through it. Characters exhibit behaviors that are typical for their setting; we term these populations functional crowds. A key element of this work is ensuring that the simulations are easy to create and modify. Roles and groups help specify behaviors, a parameterized representation adds the semantics of actions and objects, and four types of actions (i.e., scheduled, reactive, opportunistic, and aleatoric) ensure rich, emergent behaviors.


MURI: SUBTLE: Situation Understanding Bot Through Language and Environment (2007-present)

For effective human-bot communication to be possible, we must move from robust sentence processing to robust utterance understanding. Our bots must be able to decode not only which sentence the speaker used but also what the speaker intended by it. This task takes us far beyond a precise specification of the literal meanings of individual sentences in isolation, and well past current text processing methods. It demands a robust and tractable computational understanding of both implicit and explicit linguistic meaning.


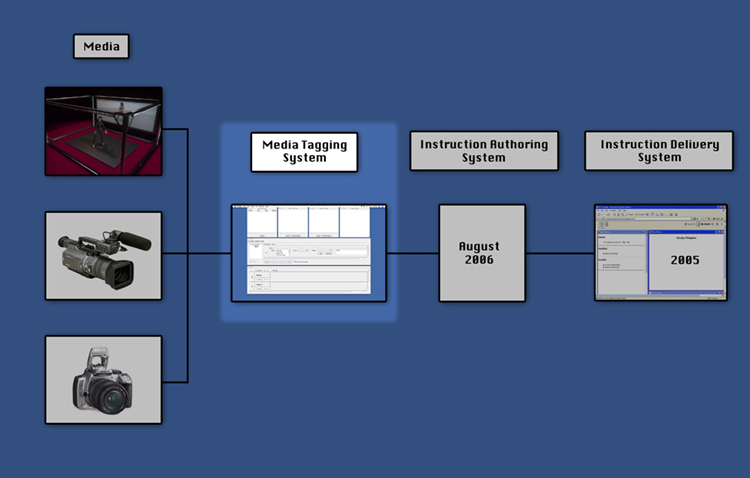

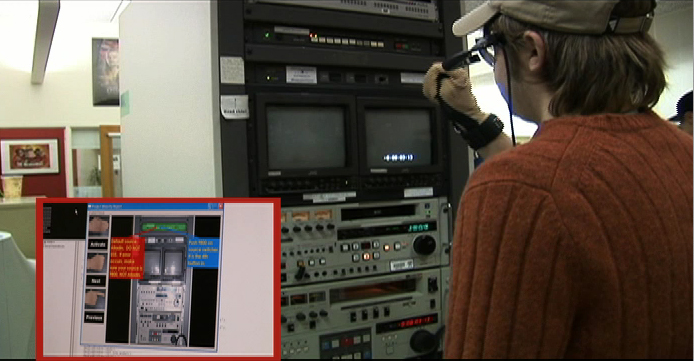

Air Force: AVIS-MS: Advanced Visual and Instruction Systems for Maintenance Support (2005-2007)

The complexity, customization, and packaging of military platforms and systems increase maintenance difficulty at the same time as the available pool of skilled technical personnel may be shrinking. In this environment, maintenance training, technical order presentation, and flight-line operational practice may need to adopt "just-in-time" procedural aids. Moreover, real-world maintenance may not permit the hardware indulgences and rigid controls of laboratory settings for visualization and training systems, while the actual activities of maintainers will challenge the requirements for portable or wearable devices. This project investigates technologies that may be used in the maintenance of Air Force equipment.


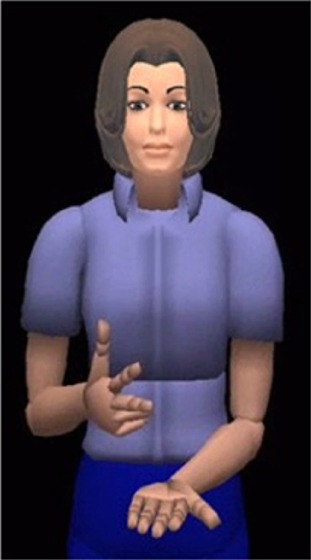

NSF: American Sign Language Natural Language Generation and Machine Translation (2005-2006)

The goal of this project was to develop new technologies that enable the machine translation of English text into animations of American Sign Language. This research would make more information and services available to the majority of Deaf Americans who face English literacy challenges. Because signed languages such as ASL contain phenomena not seen in traditional written or spoken languages, they are particularly challenging to process using standard machine translation (MT) approaches. Exploring the computational linguistics of ASL can help us understand the limitations of current MT technologies and motivate the development of new ones.


NASA: RIVET: Rapid Interactive Visualization for Extensible Training (2004-2007)

The new NASA mandate calls for missions of unprecedented remoteness and duration. Challenges include high system complexity, limited training time, and low tolerance for error. Human capabilities remain relatively fixed, and current training and instruction tools are inadequate. Our mandate: to provide computer-based integrated training and instruction tools that are visually intuitive and adaptable to user skill level and context.


ONR: VIRTE: Virtual Technologies and Environments (2004-2005)

The Virtual Technologies and Environments (VIRTE) project from the Office of Naval Research is developing a virtual reality-based training system for Marine Corps fire teams in close quarters battle (CQB). HMS has the task of creating smarter, real-time, reactive computer-generated forces (CGFs). HMS contributed to this project by extending the capabilities of the underlying game engine, Gamebryo, with inverse kinematics. Other areas of focus include synthetic vision and attention models, which allow smart agents with specific cognitive tasks and internal states to generate appropriate eye, face, head, and body behaviors in dynamically changing environments.


(2002-2005) Highly controllable virtual humans are essential for simulations that involve interactions with highly detailed virtual environments. These virtual environments could represent a power plant, an airport, a ship, or a factory. In most cases they are created from CAD systems and represent conceptual or detailed designs. These simulations should include the real-world operations and activities of the virtual humans, which helps ensure that the design is safer, more useful, more maintainable, and more comfortable for the targeted user population.


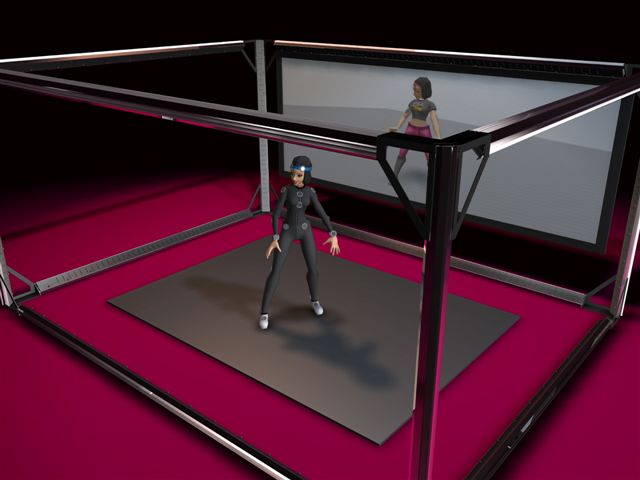

(2002) LiveActor combines an optical motion capture system with a stereo 3-D projection environment, allowing users to experience a truly immersive virtual reality environment. Together, these systems provide functionality beyond their individual, traditional uses. For example, motion capture is traditionally used for animation, game development, and human performance analysis, but with LiveActor, users will be able to interact directly with characters embedded within virtual worlds. Traditional VR experiences provided through CAVEs(TM) or head-mounted displays usually offer limited simulations and interactions (i.e., head tracking and wand tracking), whereas the LiveActor system allows whole-body tracking. This provides a more realistic experience and more data for analysis.


NASA: Crew Task Simulation for Maintenance, Training, & Safety (2000-2003)

Ambitious space missions will present new challenges for space human factors as crews interact with and maintain complex equipment and automation. This demands that task design, rehearsal, and procedure refreshing be based on procedure instructions validated on the ground. We wish to investigate the requirements for formulating, interpreting, and validating procedure instructions. Crew instructions should express complex actions and their expected results clearly and unambiguously.


Amplifying Control and Understanding of Multiple ENtities (ACUMEN) (2001-2002)

In virtual environments, controlling numerous entities in multiple dimensions can be difficult and tedious. In this project, we present a system for synthesizing and recognizing aggregate movements in a virtual environment through a high-level natural language interface. The principal components include an interactive interface for aggregate control based on a collection of parameters extending an existing movement quality model, a feature analysis of aggregate motion verbs, recognizers that detect occurrences of features in a collection of simulated entities, and a clustering algorithm that determines subgroups. Results based on simulations and a sample instruction application are shown.


Pedestrians: Creating Agent Behaviors through Statistical Analysis of Observation Data (2000)

Creating a complex virtual environment with human inhabitants that behave as we would expect real humans to behave is a difficult and time-consuming task. Time must be spent constructing the environment, creating human figures, creating animations for the agents' actions, and creating controls for the agents' behaviors, such as scripts, plans, and decision-makers. Often, work done for one virtual environment must be completely replicated for another. Robust, procedural actions that can be ported from one simulation to another would ease the creation of new virtual environments. Because walking is useful in many different virtual environments, creating natural-looking walking is important. In this work we present a system for producing more natural-looking walking by incorporating actions for the upper body. We aim to provide a tool that authors of virtual environments can use to add realism to their characters with little effort.


(1999-2001) The work presented here extends previous PAR work, which uses natural language input to dynamically alter the behaviors of agents during real-time simulations. In that architecture we were able to give agents both immediate and conditional instructions. However, we were not able to give negative directives or constraints, such as "Do not stand in front of the car door" or "Do not walk on the grass." To carry out these types of instructions successfully, we need a planner that can dynamically alter an agent's behavior based on the constraints imposed by new instructions.


NSF: EMOTE: Synthesis and Analysis of Communicative Gesture (1999-2001)

We have been building a system called EMOTE to parameterize and modulate action performance. It is based on a human movement observation system called Laban Movement Analysis. EMOTE is not an action selector per se; it is used to modify the execution of a given behavior and thus change its movement qualities or character. The power of EMOTE arises from its relatively small number of parameters, which control or affect a much larger set of lower-level movement parameters.


ONR: Virtual Environments for Training (1996-2001)

Virtual Environment Training (VET) is a computerized training system that allows peacekeeping forces (military, police, SWAT teams, etc.) to train for peacekeeping missions using an immersive virtual reality system. The current system allows a trainee to train in a roadblock scenario, in which a roadblock has been set up to find a suspected criminal.